Detecting bots and getting rid of them

As an extension to the article “Banning impolite bots (1)”, here are some additional ideas for banning bots.

One simple approach to getting rid of bots (or other malicious actors) is to detect them as early as possible and block their access. Some botnets operate as a real team, with highly specialized, dedicated roles, but general bot prevention still reduces the number of more advanced attempts that follow. For example, one bot checks an IP address and whether it is reachable. If it finds a web server, it tries to identify the basic setup (IIS, Apache, NGINX or something else as the server; whether the site uses index.html or .php) and then hands off to another bot that probes for phpMyAdmin, WordPress, etc. If the page belongs to a webcam, the data is forwarded to yet another bot. Sometimes, after one IP is blocked, the next bot continues where the first one left off. In other cases the bots load-balance, alternating the requests between several bots.

There are many clever and creative botnets.

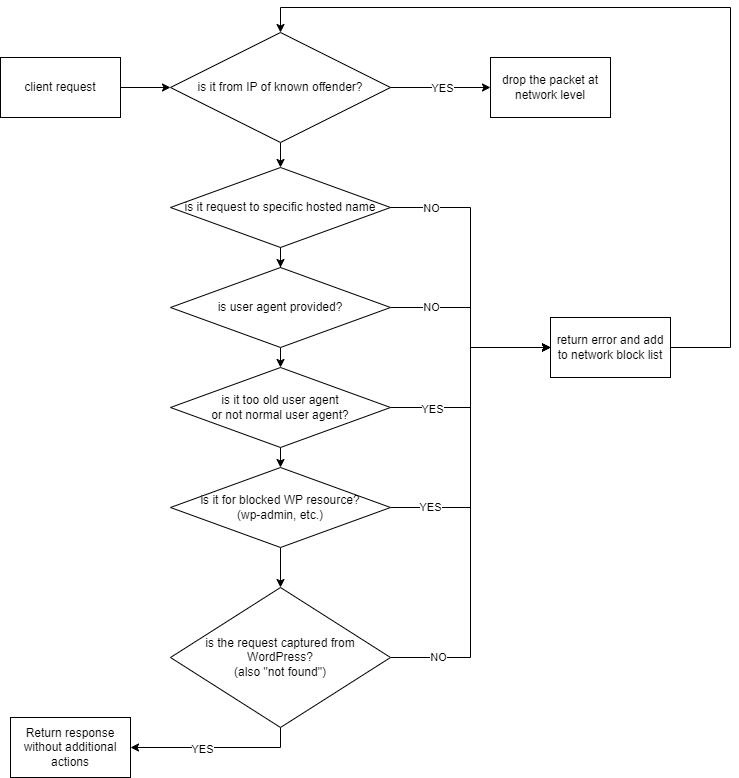

The simplest approach is to have a “default” web server (virtual host) answer requests made directly to the IP address. If a client does not know which host it is targeting, there is usually no reason to serve it anything further. Such behaviour contradicts the intended logic of DNS A and CNAME records, which exist so that a (usually) readable name is translated into an IP address. If a hosting provider serves hundreds of different websites from the same IP, a request to the bare IP is like walking into a skyscraper or another large building without knowing the exact office, or even what you are looking for.

Real users cannot know in advance which IP to use, but they do know the site name. The site may have moved between hosting providers several times while its address stayed the same. So requests to the bare IP get served once, and further requests from the same client are blocked. Since the server has to complete the handshake anyway, the other side already knows that someone picked up (an auto-responder, a person or the cat), so there is little advantage in blocking the very first attempt, but blocking the second and later attempts works fine.

This can be done by redirecting all such requests to a specific PHP script, configured at the Apache level (in the virtual host configuration).
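The decision logic can be sketched in Python as follows. This is not the author's PHP script; it assumes the handler receives the Host header and the client IP, and the names `is_bare_ip_host` and `FirstHitTracker` are hypothetical. The tracker keeps its state in memory only, so a real deployment would have to persist it (for example in a file) between requests.

```python
import ipaddress


def is_bare_ip_host(host_header):
    """Return True if the Host header is an IP literal (optionally with a port)
    instead of a site name, i.e. the request lands on the default virtual host."""
    if not host_header:
        # No Host header at all: treat it like a request to the bare IP.
        return True
    host = host_header.strip()
    if host.startswith("["):
        # Bracketed IPv6 literal, e.g. "[2001:db8::1]:8080".
        host = host[1:host.find("]")] if "]" in host else host[1:]
    elif host.count(":") == 1:
        # IPv4 address or host name followed by ":port".
        host = host.split(":", 1)[0]
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


class FirstHitTracker:
    """Serve the first bare-IP request from a client, flag every later one."""

    def __init__(self):
        self._seen = set()

    def should_block(self, client_ip):
        if client_ip in self._seen:
            return True
        self._seen.add(client_ip)
        return False
```

In a handler for the default virtual host, a request where `is_bare_ip_host(host)` is true would be answered normally the first time and recorded for blocking once `should_block(client_ip)` returns true.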

The second criterion is whether the provided user agent is valid.

If no user agent is provided, the server does not know the client's capabilities (JavaScript support, mobile or desktop, etc.). Although most browsers have similar capabilities, sending the user agent string is best practice. Some low-quality bots and actors never update their user agent. Why would a normal user run a browser that is 3 to 4 years old when the newer version works better? Or why would a normal user keep an outdated OS when the old one is no longer supported and the new one has no additional requirements?
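A rough Python sketch of the “outdated browser or OS” check. The minimum versions in `MIN_MAJOR_VERSION` are purely illustrative assumptions and would need regular updates. Only the Chrome and Firefox tokens are parsed (Chromium-based browsers such as Edge also carry a Chrome token), and the Windows tokens cover Windows XP (NT 5.1) and Windows 7 (NT 6.1).

```python
import re

# Illustrative minimum major versions (assumption); raise them over time.
MIN_MAJOR_VERSION = {"Chrome": 110, "Firefox": 102}

# UA tokens of unsupported operating systems:
# "Windows NT 5.1" = Windows XP, "Windows NT 6.1" = Windows 7.
OUTDATED_OS_TOKENS = ("Windows NT 5.1", "Windows NT 6.1")

# Matches e.g. "Chrome/79" or "Firefox/115" and captures name and major version.
_BROWSER_RE = re.compile(r"\b(Chrome|Firefox)/(\d+)")


def is_outdated_user_agent(user_agent):
    """Return True if a (non-empty) UA string claims an old browser or OS."""
    if any(token in user_agent for token in OUTDATED_OS_TOKENS):
        return True
    for name, major in _BROWSER_RE.findall(user_agent):
        if int(major) < MIN_MAJOR_VERSION[name]:
            return True
    return False
```

A UA string is trivially forged, so this only catches the lazy bots mentioned above; it is a cheap filter, not proof of anything.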

Also, “users” running zgrab, curl, wget, python, go-http, etc. are usually not real users, so their access to this website is blocked as well.

This is done with Apache’s mod_rewrite, either in the server configuration or via .htaccess.
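The same matching logic, written as a small Python function instead of mod_rewrite rules, might look like this. The marker list mirrors the tools named above and is an assumption, not an exhaustive list; plain case-insensitive substring matching is crude but cheap.

```python
# Lower-case substrings of user agents sent by common scanners, command-line
# tools and HTTP libraries (illustrative list, e.g. "curl/8.5.0",
# "python-requests/2.31.0", "Go-http-client/1.1").
TOOL_UA_MARKERS = ("zgrab", "curl", "wget", "python", "go-http")


def should_block_user_agent(user_agent):
    """Return True for a missing/blank UA or one that belongs to a tool."""
    if not user_agent or not user_agent.strip():
        return True
    ua = user_agent.lower()
    return any(marker in ua for marker in TOOL_UA_MARKERS)
```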

WordPress has some well-known addresses that normal visitors never use. For example, wp-admin is only for administrators and registered users, and is not needed at all when the site does not accept user logins.

Do users know about xml-rpc or cgi-bin, or about the file structure of the site? Most likely not, so they will not access them, and whoever does try to access them is a ninja that has to be left outside.
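A minimal Python sketch of such a “trap path” check. The prefix list is a hypothetical example built from the paths mentioned in this article plus `/wp-login.php` (an assumption); `/wp-admin` and `/wp-login.php` belong on it only if the site really has no user logins.

```python
# Path prefixes that ordinary visitors of this (hypothetical) site never request.
TRAP_PATH_PREFIXES = (
    "/wp-admin",
    "/wp-login.php",
    "/xmlrpc.php",
    "/cgi-bin",
    "/phpmyadmin",
)


def is_trap_path(request_uri):
    """Return True if the request targets a path only scanners would ask for."""
    # Drop the query string and compare case-insensitively
    # (scanners try both "/phpMyAdmin" and "/phpmyadmin").
    path = request_uri.split("?", 1)[0].lower()
    return path.startswith(TRAP_PATH_PREFIXES)
```

`str.startswith` accepts a tuple, so one call checks all prefixes; a prefix match also catches variants such as `/wp-admin/install.php`.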

WordPress handles some errors itself, for example the most common one, “not found”. If Apache’s own “not found” page is triggered instead, something is wrong with the request, so such clients also get blocked. This is done by configuring custom error pages in the Apache settings.
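The sketch below assumes the custom “not found” page is served by a small Python WSGI application; the article does not say which language its error page uses, so this is an assumption. Each hit appends a timestamp and the client IP to a file. `make_not_found_app` and the `<timestamp> <ip>` file format are hypothetical. Behind a reverse proxy, `REMOTE_ADDR` would hold the proxy's address instead of the client's.

```python
import time


def make_not_found_app(blocklist_path):
    """Build a WSGI app to serve as the custom "not found" page.

    Every hit appends "<timestamp> <client IP>" to blocklist_path, so that a
    separate job can later turn the collected IPs into firewall rules.
    """

    def app(environ, start_response):
        client_ip = environ.get("REMOTE_ADDR", "")
        if client_ip:
            with open(blocklist_path, "a", encoding="utf-8") as fh:
                fh.write(f"{int(time.time())} {client_ip}\n")
        body = b"Not Found\n"
        start_response("404 Not Found", [
            ("Content-Type", "text/plain"),
            ("Content-Length", str(len(body))),
        ])
        return [body]

    return app
```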

The IPs of all these “nothing to do here” requests are collected in a file, which is processed once per minute and added to the iptables DROP rules. This is not instant, but it is probably frequent enough.
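The once-per-minute processing step could look roughly like this in Python, assuming one `<timestamp> <ip>` entry per line in the collected file (only the last field is used, so plain IP-per-line files also work). The function names are hypothetical. `-I INPUT` inserts each DROP rule at the top of the chain so it takes effect before any ACCEPT rules, and IPv6 addresses go to `ip6tables`.

```python
import ipaddress
import subprocess


def build_drop_commands(lines, already_blocked):
    """Turn collected "<timestamp> <ip>" lines into iptables DROP commands.

    Invalid entries are skipped, duplicates are collapsed, and IPs already in
    already_blocked (a set of strings, updated in place) are not added again.
    """
    commands = []
    for line in lines:
        parts = line.split()
        if not parts:
            continue
        try:
            ip = ipaddress.ip_address(parts[-1])
        except ValueError:
            continue  # never pass garbage from the file on to iptables
        key = str(ip)
        if key in already_blocked:
            continue
        already_blocked.add(key)
        tool = "ip6tables" if ip.version == 6 else "iptables"
        commands.append([tool, "-I", "INPUT", "-s", key, "-j", "DROP"])
    return commands


def apply_commands(commands):
    """Run the generated commands; this requires root privileges."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

A cron entry would run such a script once per minute, which is cron's finest granularity. The script would also need to truncate or rotate the input file after processing and to keep `already_blocked` between runs, which this sketch leaves out.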

A diagram of the blocking decision follows. Some of the actions are done in several steps and some are bundled into a single step, so it is only meant as simple guidance.