Web server benchmarking is covered on another page.

Web page benchmarking is influenced by server performance, the framework used, connection speed and latency. It also depends on the device the page is loaded on (a small or large screen, with lazy loading of pictures or different media tags, for example), on whether the browser is a modern one or an old one with missing features, and on whether advertisements are loaded or blocked by the user. It matters whether the full load, time to interactive or first paint is measured, and whether a single domain or multiple domains are used. The user's perception of speed is also influenced by distractors (progress bar, spinning donut, splash screen, etc.). Another important factor is caching: is this the first visit, or are most of the files already cached (including DNS caching)?

So this is really complicated, like comparing apples to oranges… and then to pineapples… and then to lettuces.

So the simplest case to check is a small plain HTML page without pictures and with inlined CSS and JS.

A simple page can be several kB in size while still carrying meaningful information of thousands of characters. Fast transfer, fast rendering, but not visually appealing.

Next is HTML with external CSS and external JS, but without graphics.

CSS files can get big and hard to read, but they rarely get bigger than a small JPEG picture. Scripts, on the other hand, can get bigger. For example, jQuery is 90kB minified and almost 300kB without minification. But this is still less than a high-resolution photo or an MP3 song.

External CSS and JS are intended to be reused, so they need to be downloaded only on the first load. Even if their size is not negligible, a single transfer shared by several web pages makes it negligible.
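To put a number on this, here is a small Python sketch, assuming a perfect cache: the shared CSS/JS are downloaded once and every page view then fetches only its own HTML. The sizes are hypothetical: 15kB of HTML per page and 120kB of shared assets (for example the 90kB minified jQuery plus 30kB of CSS).

```python
def average_kb_per_view(page_views: int, html_kb: float, shared_assets_kb: float) -> float:
    """Average kB transferred per page view when CSS/JS are shared and cached.

    Assumption: a perfect cache, so the shared assets are downloaded only on
    the first view, while every view downloads its own HTML.
    """
    if page_views < 1:
        raise ValueError("page_views must be at least 1")
    total_kb = page_views * html_kb + shared_assets_kb
    return total_kb / page_views


for views in (1, 10, 100):
    print(views, average_kb_per_view(views, html_kb=15, shared_assets_kb=120))
```

A single view costs 135kB, but over 100 views the average drops to about 16.2kB, close to the HTML size alone, which is why the shared files are so often left out of the calculation.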

Page with pictures (sometimes lots of pictures).

Pictures can take up a significant part of the page size, but for some of them the file size does not really matter if they are reused multiple times. If the pictures are not reused, thumbnails can be shown and the high-resolution pictures loaded on demand. This is especially important for picture galleries with hundreds of pictures.
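A thumbnail is usually the same picture scaled down so that its longer side fits a fixed box. The sketch below computes the thumbnail dimensions while keeping the aspect ratio; the 200px box and the 4000×3000 photo are hypothetical values.

```python
def thumbnail_size(width: int, height: int, max_side: int = 200) -> tuple[int, int]:
    """Scale (width, height) so the longer side is at most max_side.

    The aspect ratio is kept, and pictures that are already small are not enlarged.
    """
    scale = min(1.0, max_side / max(width, height))
    return max(1, round(width * scale)), max(1, round(height * scale))


w, h = thumbnail_size(4000, 3000)
print(w, h)                        # 200 150
print((4000 * 3000) / (w * h))     # 400.0 times fewer pixels
```

The file size does not shrink exactly in proportion to the pixel count (compression efficiency varies), but the difference is usually large, which is what makes thumbnails worthwhile in a gallery.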

There are also different picture formats, each with its own advantages, but the most commonly used are JPG and PNG. GIF is getting rare in favor of the increasing use of SVG pictures. “ASCII art” is now an art and no longer a necessity.

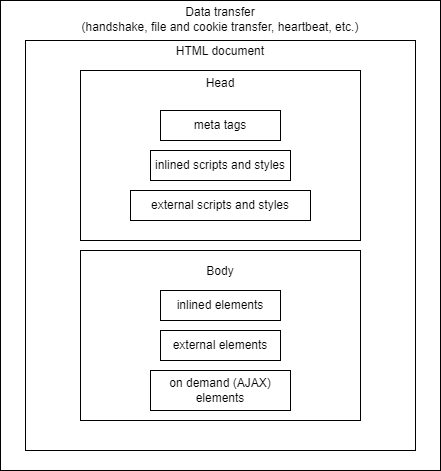

First, the connection(s) to the server are established and the main file is requested. The server can generate the content on the fly or serve static content. Then the client downloads the main file and processes it.
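These steps can be turned into a rough back-of-the-envelope model. The sketch below is a simplification, assuming one round trip for the TCP handshake, one for TLS (TLS 1.3; TLS 1.2 needs two), one for the request itself, and ignoring TCP slow start, packet loss and compression. All input values are hypothetical.

```python
def main_document_time_ms(rtt_ms: float, server_ms: float, size_kb: float,
                          bandwidth_mbps: float, dns_ms: float = 0.0,
                          tls_round_trips: int = 1) -> float:
    """Rough time until the main HTML file is fully downloaded (milliseconds).

    Simplified model: DNS lookup + TCP handshake (1 RTT) + TLS handshake
    (tls_round_trips RTTs, 0 for plain HTTP) + request/response (1 RTT)
    + server time to generate the page + transfer time.
    """
    connect_ms = dns_ms + rtt_ms * (1 + tls_round_trips)
    first_byte_ms = connect_ms + rtt_ms + server_ms
    # kB * 8 = kilobits; kilobits divided by Mbit/s gives milliseconds.
    transfer_ms = size_kb * 8 / bandwidth_mbps
    return first_byte_ms + transfer_ms


print(main_document_time_ms(rtt_ms=100, server_ms=50, size_kb=50,
                            bandwidth_mbps=10, dns_ms=20))   # 410.0
```

With these numbers the waiting before the first byte (370ms) is much larger than the transfer itself (40ms), so for small pages latency matters more than size.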

The `<head>` section is not visible to the user and contains only instructions for the browser. It is the first thing to be processed, so if there is a lot to process there, it delays presenting the page to the user. Usually the reusable CSS styles and scripts are declared there, and they are expected to be cached (except on the first page load). But some “critical” scripts and styles are inlined in the head, so they are available immediately and no additional requests to the server are required.

Some pages have several external JavaScript files and several CSS files, and their combined size is usually much bigger than the HTML itself. If a page has not changed, the transfer size for the second load can be as low as 500 bytes, including the external JS and CSS, so their size is often completely neglected. Even if the page content changes or the user navigates within the site, the JS and CSS usually stay the same.
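A transfer this small typically comes from a conditional request: the browser sends back the validator it received earlier (for example the ETag in an If-None-Match header), and if nothing has changed the server answers 304 Not Modified with an empty body. Files served with a long max-age are often reused without any request at all. The sketch below shows only the server side of the ETag check; hashing the body with SHA-256 is just one possible way to produce an ETag, and weak validators (W/...) and the * value are ignored here.

```python
import hashlib
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    # One possible strong validator: a prefix of the content hash, quoted as HTTP requires.
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, if_none_match: Optional[str]) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, body) for a GET, honouring If-None-Match."""
    etag = make_etag(body)
    if if_none_match is not None:
        client_tags = [tag.strip() for tag in if_none_match.split(",")]
        if etag in client_tags:
            # Unchanged: only headers travel over the network.
            return 304, {"ETag": etag}, b""
    # "no-cache" lets the browser store the file but asks it to revalidate before reuse.
    return 200, {"ETag": etag, "Cache-Control": "no-cache"}, body


page = b"<html><body>Hello</body></html>"
status, headers, _ = respond(page, None)              # first visit: full body
status2, _, body2 = respond(page, headers["ETag"])    # repeat visit: empty body
print(status, status2, len(body2))                    # 200 304 0
```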

Many page elements repeat across pages, including many of the pictures (logos/icons, headers, footers, etc.), so their size is usually not considered either.

On-demand elements can be slow, but they are usually loaded only when they are actually needed.

For example, a page has text and multiple pictures. The initial screen is the most important part for the user experience. So if the menu and some text are loaded (usually pretty fast thanks to the reused components), the impact of loading the pictures at a later stage is negligible, and they are loaded only if the user stays on the page.
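Deciding which pictures to load can be sketched as a simple check against the visible area. Browsers already offer this natively with the `loading="lazy"` attribute on images, and scripts commonly use `IntersectionObserver` with a `rootMargin`; the function below is only a simplified model, assuming pictures are identified by the vertical position of their top edge and a hypothetical 200px margin below the screen.

```python
def images_to_load(image_tops: list[int], scroll_y: int, viewport_height: int,
                   margin: int = 200) -> list[int]:
    """Indexes of pictures whose top edge is above the bottom of the screen plus margin.

    Pictures above the current scroll position were already passed, so they are
    included as well (a real page would have loaded them earlier).
    """
    limit = scroll_y + viewport_height + margin
    return [i for i, top in enumerate(image_tops) if top <= limit]


tops = [100, 700, 1500, 3000]   # hypothetical picture positions in px
print(images_to_load(tops, scroll_y=0, viewport_height=800))      # [0, 1]
print(images_to_load(tops, scroll_y=1000, viewport_height=800))   # [0, 1, 2]
```

The picture at 3000px is never requested unless the user scrolls that far.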

Pictures can also differ depending on the device resolution: different picture files can be loaded at different resolutions. It is not just a different quality; it can also be a completely different picture. This can be done using @media rules. It works similarly to the different button and container sizes, but for images.
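In HTML this is usually done with the `srcset` and `sizes` attributes or a `<picture>` element with media conditions; with `srcset` the final choice among the candidates is up to the browser. The sketch below is a simplified selection rule, assuming the page knows the displayed width in CSS pixels and the device pixel ratio: pick the smallest file that is at least as wide as needed, otherwise the largest one. The file names are hypothetical.

```python
def pick_image(candidates: dict[int, str], css_width_px: float,
               device_pixel_ratio: float = 1.0) -> str:
    """Pick a file from {intrinsic width in px: file name} for the displayed size."""
    needed_px = css_width_px * device_pixel_ratio
    for width in sorted(candidates):
        if width >= needed_px:
            return candidates[width]
    return candidates[max(candidates)]  # nothing is big enough: use the largest


hero = {480: "hero-small.jpg", 1024: "hero-medium.jpg", 1920: "hero-large.jpg"}
print(pick_image(hero, 320))                          # hero-small.jpg
print(pick_image(hero, 1000))                         # hero-medium.jpg
print(pick_image(hero, 360, device_pixel_ratio=3))    # hero-large.jpg (needs 1080px)
```

The last case shows how a phone can end up downloading a larger file than a desktop monitor: its screen is small in CSS pixels but has a high pixel density.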

The above considerations are mostly from the point of view of content size and type, but there is also the waterfall of the content load, i.e. the order and timing in which the resources are requested and received.

One extreme example is a sports brand's web page: 7 MB that took 30 seconds for the initial page load (cache disabled and network throttled to Fast 3G) until the animation started.

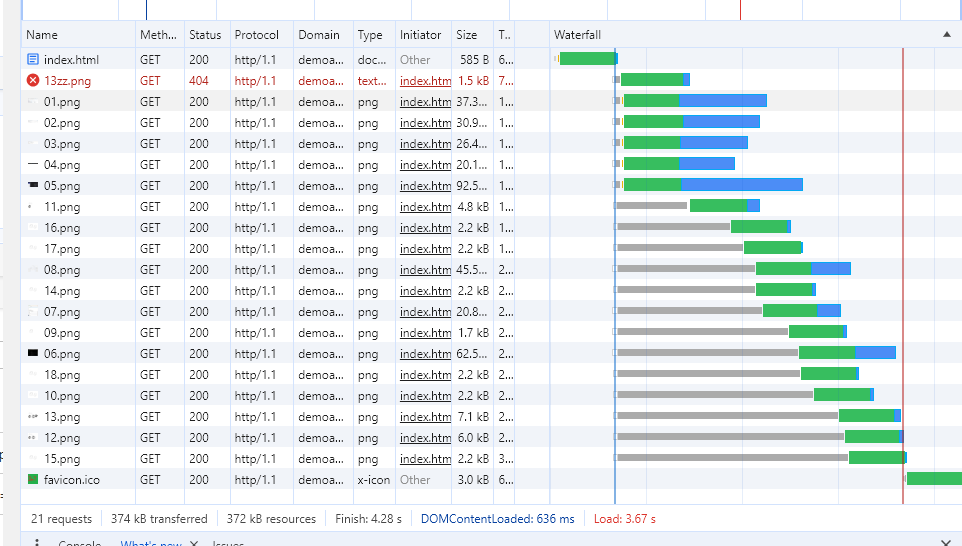

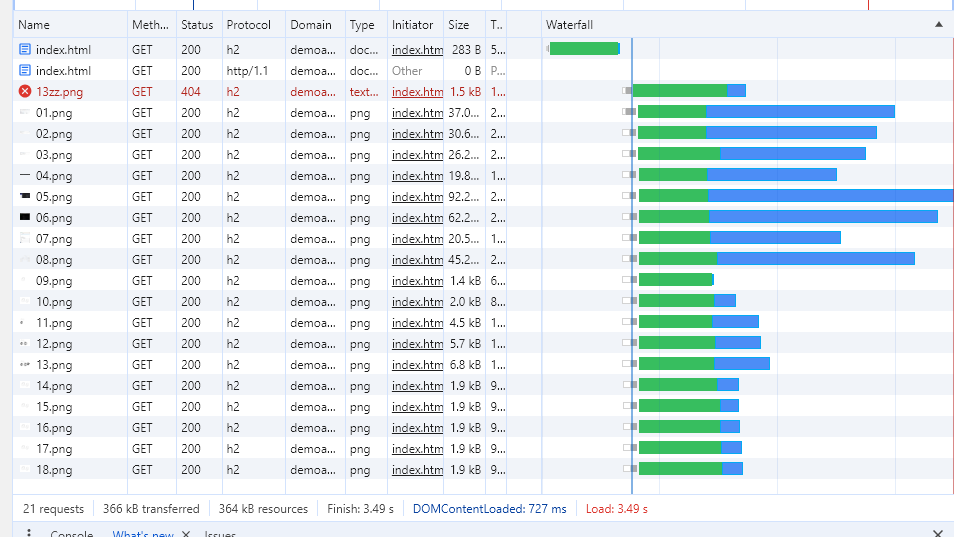

Most modern web pages are delivered via HTTP/2 (h2), which means that one connection is used for multiple requests. So if a page has 20 pictures, they are all downloaded at the same time, sharing the connection.

If, for example, SSLEngine is disabled in Apache, the connections fall back to HTTP/1.1, because browsers support HTTP/2 only over TLS. That means multiple connections are used, and the limit on the maximum number of connections per domain still applies.

The following two pictures illustrate this behaviour. Although domain sharding has rarely been used in recent years (probably the last 10 years), it can still be used to separate content between servers and/or domains, for example pictures via one domain and other files via another (www and non-www still count as two different domains).

As the total time for the page download is nearly the same, it is usually not worth overcomplicating the setup, especially for low-traffic web pages.
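Why the total time stays nearly the same can be illustrated with a deliberately crude model. Assumptions: all files have the same size, the connections are already open, HTTP/1.1 browsers allow a limited number of parallel connections per domain (commonly 6), every wave of requests costs one round trip, and the bandwidth is shared by all connections, so the pure transfer time does not depend on how the files are split. The numbers (20 pictures of 50kB, 100ms round trip, 10Mbit/s) are hypothetical.

```python
import math


def load_time_ms(n_files: int, file_kb: float, rtt_ms: float, bandwidth_mbps: float,
                 connections_per_domain: int = 6, domains: int = 1,
                 multiplexed: bool = False) -> float:
    """Crude estimate for downloading n equally sized files over open connections.

    HTTP/1.1: one request per connection at a time, so requests go out in waves
    of connections_per_domain * domains, each wave costing one round trip.
    HTTP/2 (multiplexed=True): all requests share one connection, so one wave.
    The bandwidth is shared either way, so the transfer part is identical.
    """
    if n_files == 0:
        return 0.0
    parallel = n_files if multiplexed else connections_per_domain * domains
    waves = math.ceil(n_files / parallel)
    transfer_ms = n_files * file_kb * 8 / bandwidth_mbps  # kilobits / (Mbit/s) = ms
    return waves * rtt_ms + transfer_ms


args = dict(n_files=20, file_kb=50, rtt_ms=100, bandwidth_mbps=10)
print(load_time_ms(**args))                    # HTTP/1.1, one domain: 1200.0
print(load_time_ms(**args, domains=2))         # HTTP/1.1, two domains: 1000.0
print(load_time_ms(**args, multiplexed=True))  # HTTP/2: 900.0
```

In this model the 800ms transfer part is the same in every case and only the number of round trips changes, so splitting the content between domains buys little once HTTP/2 is used.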

For high-traffic web pages, splitting between domains is still practiced. For example, one high-performance server generates the content, another provides the media files and another is used for analytics (heartbeat, visible content, hovering over pictures/links, etc.), or one domain is used for the general pages, another for the shop and another for the forum.

For large, high-traffic web pages, the servers might be separated by location (continent or country).

Back in the dark ages, when the internet was much slower and connections and server resources were much more limited, another technique was used to increase speed: image sprites (also known as CSS sprites). It basically meant putting multiple pictures in one file and then using CSS to show just a predefined part of it. It was commonly used for icons and for animations made from multiple pictures. W3Schools has a nice demo and explanation. As this was not restricted to pictures, it was also used for sound files. Another benefit was that it ensured all of the sprites came from the same bundle (same color scheme, decorations or something else).
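The CSS side of sprites comes down to shifting the background of a small element. The sketch below generates such rules for icons packed left to right and top to bottom into one sheet; the icon names, the 32px size and icons.png are hypothetical.

```python
def sprite_css(names: list[str], icon_w: int, icon_h: int, columns: int,
               sheet_url: str = "icons.png") -> str:
    """CSS rules that each show one icon from a grid-shaped sprite sheet."""
    rules = []
    for index, name in enumerate(names):
        col, row = index % columns, index // columns
        # A negative offset moves the sheet left/up so the wanted icon is visible.
        x, y = -col * icon_w, -row * icon_h
        rules.append(
            f".icon-{name} {{ width: {icon_w}px; height: {icon_h}px; "
            f"background: url({sheet_url}) {x}px {y}px no-repeat; }}"
        )
    return "\n".join(rules)


print(sprite_css(["home", "search", "cart", "user"], 32, 32, columns=2))
```

Four icons then cost one request instead of four, which mattered when every extra connection was expensive.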

Page load speed is usually measured by search engines and advertising platforms. Fast page load means a better user experience and less influence from the additional advertisement components. There are also websites where the page speed can be checked, and some of that functionality is built into web browsers as well.

Another metric is browser memory usage: less memory usage means more concurrent tabs. Some people have 10-20 or even more tabs open. If each tab takes 40MB, more pages can be active at the same time without delays. But if the pages take 200MB, fewer pages fit into memory. Some pages can take up to 1GB (yes, 1000MB, although this is after leaving the page active for a long time), and having 10 such pages consumes a lot of memory, so some people blame the browsers for the high memory usage.

Cached content is not downloaded again over the network, but it still takes up storage space on the device. Fast content loading does not mean that the page will be very responsive, so device performance and storage keep increasing to satisfy user expectations.

How big is one book saved as a plain text file? Maybe 1 or 2 MB. A web page smaller than 1MB is not common anymore, so the share of meaningful content in web pages has drastically decreased. As a comparison, “school essay word count” can be searched on the internet and then compared to the size of a random small picture.
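The book estimate can be checked with simple arithmetic, assuming plain ASCII text (one byte per character) and an average English word of about 5 letters plus a space. The word counts below are hypothetical round numbers.

```python
def plain_text_kb(words: int, avg_word_len: float = 5.0, bytes_per_char: int = 1) -> float:
    """Approximate size of plain text in kB (1kB = 1000 bytes).

    Each word is counted with one extra character for the following space or
    punctuation; bytes_per_char = 1 assumes ASCII (UTF-8 uses more for other scripts).
    """
    return words * (avg_word_len + 1) * bytes_per_char / 1000


print(plain_text_kb(500))       # school essay: 3.0 kB
print(plain_text_kb(100_000))   # novel: 600.0 kB
print(plain_text_kb(300_000))   # long book: 1800.0 kB
```

So a 500-word essay fits into about 3kB, less than most small pictures on a page.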

As “real-life data”:

- 10kB – this article;

- 120kB – initial page load without the pictures;

- 400kB – with loaded pictures;

- 700kB – expanded.

But with the cache enabled and a previous load, this page requires just 400 bytes to check whether there are updates.

Is it worth having 130kB of pictures (the waterfall examples above)? 130kB is still small, and the pictures might later be updated to a better file format or replaced by vector graphics instead of bitmaps, but currently there is no need to do this.