Web pages are a great way to provide information, but their mere existence also reveals that there is a server behind the host name (and the IP), that this server accepts externally initiated connections, and that it can communicate with external systems. Well, that is the purpose of web pages.

The web server also reveals this to parties that usually have no interest in the content on the server but want to use the server for their own purposes. Those parties go by different names and can be divided by hat color, into governments, private companies and individuals, by country, etc., but the purpose is frequently the same: use the server for their own profit by selling connectivity information or on/offline status, harvesting data, infesting the server, whatever is possible. Controlling someone else’s server means free storage, compute power and network connectivity, and as a bonus the responsibility stays with the server owner. Infested servers can then be used for further web scanning, (D)DoS, simple flooding, faking advertisement activity, whatever.

So this server is set up to block (actually, silently drop) incoming connections from remote addresses that have behaved improperly at least once. It is currently done in a rather lame way: the default error pages are redirected to a PHP script that takes the remote address and adds it to a block list, which is passed to iptables, which in turn drops further connections. Currently there are around 2000 (the list has since been cleared, so the number is now different) distinct IPs that showed improper client behavior such as:

- not reporting any “user agent” or reporting one that is too old;

- reporting a bot or script user agent;

- attempting to get the WP-Admin page;

- attempting to open a non-existent page and bypassing the default WP error pages;

- accessing the server by IP address only (bypassing DNS and not providing SNI).

As some of the IPs are consecutive or tightly grouped, it is obvious that they belong to one organization. In this case the IPs are grouped into subnets and the subnets are blocked. This is not always the best approach, as it might block “innocents”, but it is a bad neighborhood.

As I think the approach is too basic to reveal anything secret, here is how it currently works:

Add an ErrorDocument directive to the site config that points to the PHP file. For example, add “ErrorDocument 500 /get_blocked.php” to the sites-enabled files of the Apache server.

In the PHP file, first check whether the IP ($_SERVER['REMOTE_ADDR']) is whitelisted. If it is, then obviously do not block it. If it is not, check the user agent ($_SERVER['HTTP_USER_AGENT']) and, if it is on the to-block list, just block it. Many bots still use obsolete User-Agent strings. There are not many real end users with browsers that were released several years ago and have been superseded by at least 50 newer versions.

Check whether the requested page is on the to-block list, for example WP-Admin, admin, login, phpMyAdmin, mysql, etc. Why would someone without authorization try to open such pages? Simply add the IP to a list to be blocked later and stop dealing with it.
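The checks described above (whitelist, then user agent, then requested page) can be sketched roughly as follows. This is an illustration in Python rather than the PHP script itself, and every list entry, the `MIN_CHROME_MAJOR` cut-off and the function name `should_block` are hypothetical placeholders, not the values used on this server.

```python
import re

# Hypothetical example values; the real lists are not published in this post.
WHITELIST = {"192.0.2.10"}
BLOCKED_AGENT_MARKERS = ("bot", "curl", "wget", "python-requests")
BLOCKED_PATH_MARKERS = ("wp-admin", "admin", "login", "phpmyadmin", "mysql")
MIN_CHROME_MAJOR = 100  # assumed threshold for "too old"


def should_block(remote_addr: str, user_agent: str, path: str) -> bool:
    """Return True if the request looks like improper client behavior."""
    # Whitelisted addresses are never blocked.
    if remote_addr in WHITELIST:
        return False

    agent = (user_agent or "").strip().lower()
    # No user agent at all.
    if not agent:
        return True
    # Bot or script user agent.
    if any(marker in agent for marker in BLOCKED_AGENT_MARKERS):
        return True
    # Obsolete browser version (only Chrome-style strings are checked here).
    match = re.search(r"chrome/(\d+)", agent)
    if match and int(match.group(1)) < MIN_CHROME_MAJOR:
        return True

    # Requests for pages that a normal visitor has no reason to open.
    lowered_path = path.lower()
    return any(marker in lowered_path for marker in BLOCKED_PATH_MARKERS)
```

Substring matching is deliberately crude here; it will also catch legitimate pages whose path happens to contain one of the markers, which matches the "block first" spirit of the setup but may be too aggressive for other sites.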

Create a cron job that starts a script which checks the list of IPs to block. The script processes the list and then empties the “trash bin”, which waits for new content. As the script runs with elevated permissions (required for iptables), the input is sanitized again.

As the cron script does not run very frequently, one IP sometimes lands in the list several times. As this just bloats iptables with useless rules, the list is sometimes manually purged of duplicates and reloaded.
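The sanitizing and de-duplication steps could look something like the sketch below. It is a Python illustration, not the actual cron script: the function name `build_drop_commands` and the choice of the `INPUT` chain are assumptions. It only builds the iptables argument lists and leaves running them (for example with `subprocess.run`) to the caller.

```python
import ipaddress


def build_drop_commands(raw_lines):
    """Validate and de-duplicate collected addresses, then build DROP rules.

    Returns a list of argument lists; nothing is executed here.
    """
    seen = set()
    commands = []
    for line in raw_lines:
        candidate = line.strip()
        try:
            # Anything that is not a plain IP address is rejected, so
            # shell metacharacters never reach the privileged command.
            addr = ipaddress.ip_address(candidate)
        except ValueError:
            continue
        # iptables handles IPv4 only; skip IPv6 and repeated entries.
        if addr.version != 4 or addr in seen:
            continue
        seen.add(addr)
        # Assumed chain name: INPUT.
        commands.append(
            ["iptables", "-A", "INPUT", "-s", str(addr), "-j", "DROP"]
        )
    return commands
```

Passing the arguments as a list instead of a single shell string is a second line of defense on top of the address validation.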

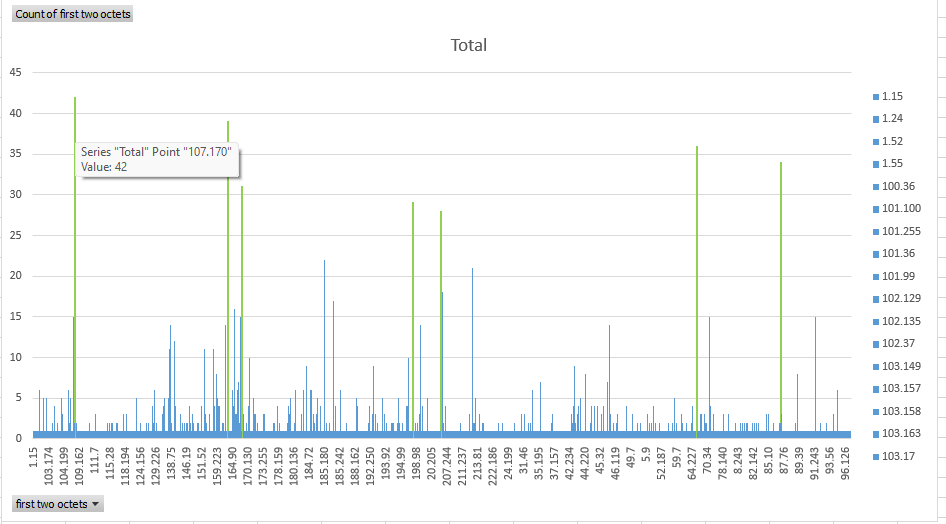

Finding the related IPs is also done in a lame way: the IPs are imported into a spreadsheet application and a pivot table is created based on the first two octets (example picture follows). The related remote addresses appear as spikes on the graph.

If there are 20 IPs to block that differ only in the last octet, then… sorry, but they all get blocked. The same goes for 50 that differ only in the last two octets: out of 2000 blocked entries, having 50 from the same range without any relation between them is improbable. So there are 5-6 subnets that are blocked.
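The same grouping can be done without a spreadsheet. The following is a minimal Python sketch, assuming the blocked IPv4 addresses are available as a list of strings; the function names are made up for illustration, and the thresholds in the usage note are the ones mentioned above (20 per /24, 50 per /16).

```python
import ipaddress
from collections import Counter


def count_by_prefix(ips, prefix_len=16):
    """Count how many addresses fall into each subnet of the given size."""
    counts = Counter()
    for ip in ips:
        # strict=False lets a host address stand in for its network.
        network = ipaddress.ip_network(f"{ip}/{prefix_len}", strict=False)
        counts[str(network)] += 1
    return counts


def suspicious_subnets(ips, prefix_len, threshold):
    """Return the subnets that contain at least `threshold` addresses."""
    counts = count_by_prefix(ips, prefix_len)
    return sorted(net for net, n in counts.items() if n >= threshold)
```

Calling `suspicious_subnets(ips, 24, 20)` would list the ranges where only the last octet differs, and `suspicious_subnets(ips, 16, 50)` the ones where the last two octets differ.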

There are even bots that run on public cloud providers' infrastructure or work from behind a VPN. Obviously some people manage to expand the business and make it look less shady.

Another method to determine who to block is to look at the DNS entries: iptables -L -v replies with host names by default. In that case it does not return the IP, so a possible workaround is to export the blocked entries once with DNS host names and once with IPs. Matching the two lists by rule number creates an IP-to-host-name dictionary.
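Matching the two exports by rule number might be sketched like this in Python. The sketch assumes the non-verbose listings produced by `iptables -L INPUT --line-numbers` (host names) and `iptables -L INPUT -n --line-numbers` (numeric), where the source is the fifth column; with `-v` the column positions differ and the index would have to be adjusted. The function names are hypothetical.

```python
def parse_sources(listing: str) -> dict:
    """Map rule number -> source column from a non-verbose iptables listing."""
    sources = {}
    for line in listing.splitlines():
        fields = line.split()
        # Rule lines start with the rule number; header lines do not.
        # Assumed columns: num, target, prot, opt, source, destination.
        if len(fields) >= 6 and fields[0].isdigit():
            sources[int(fields[0])] = fields[4]
    return sources


def ip_to_host(numeric_listing: str, resolved_listing: str) -> dict:
    """Join the numeric and the resolved listing on the rule number."""
    by_ip = parse_sources(numeric_listing)
    by_host = parse_sources(resolved_listing)
    return {by_ip[num]: by_host[num] for num in by_ip if num in by_host}
```

Addresses without a reverse DNS entry simply map to themselves, because iptables prints the IP in both listings.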

Some of the bigger botnets have proper DNS entries. This is the advantage of being only a little shady and having enough resources to show up openly with this activity, and even to make money from it (selling the scan reports to whoever pays) or to do it as a free public service (because someone sponsored it and wants to get it cheaper by presenting it as an open source community initiative).

Anyway… bots that try to access content before reading the robots.txt file are not welcome. The ones checking for content that is obviously not public (logins, for example) are also not welcome. The ones that provide no User-Agent, or some ancient version, are also not welcome.